Beyond Decibels — The Design, Science, and Emotion of Modern Sound

“Good sound isn’t just heard — it’s felt.”

For decades, sound was measured in watts, hertz, and decibels. The louder, the better — or so we thought. But in 2025, the idea of “good audio” has gone far beyond volume and clarity.

Today, the best sound isn’t defined by specs — it’s defined by emotion, design, and personalization.

From AI-driven tuning to psychoacoustic soundscapes, the new wave of modern sound design blends science with feeling, hardware with identity, and data with creativity.

It’s not just about what you hear — it’s about how sound makes you feel.

🔬 The Science — Engineering Sound Beyond the Numbers

Modern audio engineering isn’t about boosting bass or sharpening treble anymore — it’s about understanding the human brain.

Our ears capture sound, but it’s the brain that interprets it.

That’s where psychoacoustics — the science of how we perceive sound — has become the foundation of audio innovation.

Companies like Sony, Apple, and Dirac combine acoustic measurement with machine learning to model how listeners perceive certain frequencies, spatial cues, and tonal warmth.

The result? Systems that adapt in real time — shaping the listening experience to you, not just to the device.

Take Apple’s Adaptive Transparency or boAt’s AI Hearing Engine. Features like these adapt on the fly, adjusting EQ curves, active noise cancellation, and ambient awareness levels to match your surroundings.

This isn’t engineering for perfection — it’s engineering for perception.

And that’s a subtle but revolutionary shift.

🎨 The Design — How Aesthetics Shape Audio Experience

In the modern sound era, design speaks before sound does.

The way headphones curve, the materials used, and even the click of a volume wheel — all contribute to how we perceive quality.

Minimalism once ruled premium audio design — black, chrome, and brushed aluminum. But in 2025, design is becoming more expressive, emotional, and human.

Brands like Bang & Olufsen, Nothing, Noise, boAt, and Sony understand that sound products are now wearable identity markers. They blend audio engineering with fashion sensibility, giving listeners not just functionality but presence.

- Nothing’s transparent aesthetic tells a story of openness and precision.

- boAt’s Street Edition connects youth culture with bold, unapologetic energy.

- Sony’s LinkBuds series balances comfort with fluid, organic design for everyday life.

Every shape, color, and finish has intent — because in today’s market, sound is no longer hidden behind speakers; it’s worn, displayed, and shared.

Design now translates emotion before the music even starts.

💭 The Emotion — Why Sound Hits Deeper Than We Realize

Music has always been emotional, but sound design is now engineered to feel emotional.

Different frequency ranges tend to trigger different psychological and physical responses. Low frequencies create depth and power, mids carry warmth and human connection, and highs bring air and clarity.

Modern sound engineers use this knowledge to craft emotional sound signatures.
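To make the idea of a "sound signature" concrete, here is a minimal Python sketch that treats a signature as a set of per-band gains. The band edges (roughly 20 to 250 Hz, 250 Hz to 4 kHz, and 4 to 20 kHz) and the "warm" preset are illustrative assumptions, not any brand's actual tuning; the only fixed rule is the standard conversion from an amplitude gain in decibels to a linear multiplier, 10^(dB/20).

```python
# Approximate band edges in Hz (assumed; engineers draw these lines differently).
BANDS = {
    "low": (20.0, 250.0),       # depth and power
    "mid": (250.0, 4000.0),     # warmth and vocal presence
    "high": (4000.0, 20000.0),  # air and clarity
}

# A hypothetical "warm" signature: per-band gain in decibels.
WARM_SIGNATURE = {"low": 3.0, "mid": 1.5, "high": -2.0}


def band_for(freq_hz: float) -> str:
    """Return the name of the band that contains freq_hz."""
    for name, (lo, hi) in BANDS.items():
        if lo <= freq_hz < hi:
            return name
    raise ValueError(f"{freq_hz} Hz is outside the range modeled here")


def db_to_linear(gain_db: float) -> float:
    """Convert an amplitude gain in dB to a linear multiplier: 10^(dB/20)."""
    return 10 ** (gain_db / 20)


def apply_signature(freq_hz: float, amplitude: float, signature: dict) -> float:
    """Scale one sinusoidal component's amplitude by its band's gain."""
    return amplitude * db_to_linear(signature[band_for(freq_hz)])


if __name__ == "__main__":
    for f in (80.0, 1000.0, 8000.0):
        print(f, band_for(f), round(apply_signature(f, 1.0, WARM_SIGNATURE), 3))
```

With this preset, a 80 Hz component is lifted by about 1.41x (+3 dB) while an 8 kHz component is trimmed to about 0.79x (-2 dB), which is the "more depth, less edge" character a warm signature aims for.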

Streaming platforms like Spotify and Apple Music have even begun using emotional tagging — letting AI match audio dynamics with user moods.

And then there’s AI adaptive audio, where your earphones subtly change their tone depending on your activity — workout, commute, or sleep.

Your sound now understands you.

A study by the Institute of Sound Research (2024) found that consistent exposure to personalized, emotionally aligned sound profiles improved focus and reduced stress by 23%.

In short: sound isn’t background anymore — it’s biological design.

⚙️ From Hardware to Harmony — The Role of AI in Modern Sound Design

The fusion of AI and acoustic science has made personal listening more intuitive than ever.

AI-driven sound systems analyze ambient noise, your heart rate (via wearables), and even time of day to tune frequencies dynamically.

It’s the same idea behind Bose’s Adaptive EQ, Sony’s Headphones Connect, and Noise’s SenseAdapt technology — where machine learning refines sound to match you.
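A heavily simplified sketch of this kind of context-aware tuning follows. It is hypothetical throughout: the `Context` fields, the 60 dB threshold, the 120 bpm "workout" cutoff, and the night-time treble cut are illustrative rules, not how Bose, Sony, or Noise actually tune their products. Real systems would also smooth changes over time rather than jump between settings.

```python
from dataclasses import dataclass


@dataclass
class Context:
    ambient_db: float  # ambient noise level in dB SPL (e.g. from earbud mics)
    heart_rate: int    # beats per minute, from a paired wearable
    hour: int          # local hour, 0-23


def adaptive_gains(ctx: Context) -> dict:
    """Return hypothetical per-band EQ gains in dB for the given context."""
    gains = {"low": 0.0, "mid": 0.0, "high": 0.0}

    # Assumed rule: street and commute noise is bass-heavy and masks low
    # frequencies, so raise bass gradually above 60 dB, capped at +6 dB.
    if ctx.ambient_db > 60:
        gains["low"] += min((ctx.ambient_db - 60) * 0.2, 6.0)

    # Assumed rule: an elevated heart rate suggests a workout, so add punch
    # in the bass and a little sparkle in the treble.
    if ctx.heart_rate >= 120:
        gains["low"] += 2.0
        gains["high"] += 1.5

    # Assumed rule: late at night, soften the treble for a gentler sound.
    if ctx.hour >= 22 or ctx.hour < 6:
        gains["high"] -= 3.0

    return gains


if __name__ == "__main__":
    print(adaptive_gains(Context(ambient_db=80, heart_rate=130, hour=23)))
```

Keeping the rules as separate, additive adjustments makes each one easy to tune or switch off; a machine-learning system would, in effect, learn these weights and thresholds from listener behavior instead of hard-coding them.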

In professional environments, AI also helps creators. Tools like Adobe Podcast and LANDR Mastering use adaptive algorithms to clean up recorded speech or to master tracks according to genre and the style a creator chooses.

We’ve entered the era of co-creation between humans and algorithms.

Your headphones are no longer passive receivers — they’re thinking instruments.

🧠 The Hidden Layer — Psychoacoustics Meets Neuroscience

Psychoacoustics isn’t just an engineering discipline anymore — it’s a bridge between sound and neuroscience.

Neuroscience researchers are studying how specific sound patterns may encourage calmness, motivation, or even empathy.

That’s why meditation apps like Headspace and Calm now use binaural sound layers intended to guide brainwave patterns, blending frequency science with psychology.
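A binaural beat is simple to generate: play one pure tone in the left ear and a slightly different one in the right, and the listener perceives a slow beat at the difference frequency. The sketch below uses only Python's standard library to write a 200 Hz / 210 Hz pair, a perceived 10 Hz beat. The frequencies and amplitude are arbitrary example values, the effect only works over headphones, and whether such beats reliably steer brainwaves is still debated, so treat this as an illustration of the signal, not of its benefits.

```python
import math
import struct
import wave


def binaural_frames(base_hz: float, beat_hz: float, seconds: float,
                    rate: int = 44100, amplitude: float = 0.3) -> bytes:
    """Interleaved 16-bit stereo PCM: left at base_hz, right at base_hz + beat_hz."""
    frames = bytearray()
    for i in range(int(seconds * rate)):
        t = i / rate
        left = amplitude * math.sin(2 * math.pi * base_hz * t)
        right = amplitude * math.sin(2 * math.pi * (base_hz + beat_hz) * t)
        # "<hh" = little-endian signed 16-bit left sample, then right sample.
        frames += struct.pack("<hh", int(left * 32767), int(right * 32767))
    return bytes(frames)


def write_binaural(path: str, base_hz: float = 200.0, beat_hz: float = 10.0,
                   seconds: float = 5.0, rate: int = 44100) -> None:
    """Write the tone pair to a stereo WAV file (listen on headphones)."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(2)
        wav.setsampwidth(2)  # 2 bytes = 16-bit samples
        wav.setframerate(rate)
        wav.writeframes(binaural_frames(base_hz, beat_hz, seconds, rate))


if __name__ == "__main__":
    write_binaural("binaural_10hz.wav")
```

Neither ear receives a 10 Hz signal; the beat is constructed by the brain from the mismatch between the two channels, which is exactly why this is a psychoacoustic effect rather than an acoustic one.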

Brands like Sennheiser and Bose are even testing neural feedback in future headphones — using biometric sensors to track emotional response in real time.

Imagine sound that senses your mood and heals it.

In essence, the science of sound is becoming the science of self-regulation — redefining how we experience calm, energy, or emotion through frequencies.

🎧 The Culture Shift — From Utility to Identity

Once, headphones were tools — now they’re statements.

The shift is cultural. Sound is no longer just consumed; it’s curated, displayed, and shared.

On Instagram, sound aesthetics — like open-ear designs, color palettes, and playlists — have become as personal as fashion.

This movement marks a turning point where listening becomes lifestyle.

Wearing your favorite brand isn’t about price — it’s about philosophy.

- Nothing represents transparency.

- boAt symbolizes energy and Indian pride.

- Bose reflects quiet luxury.

Sound, once invisible, now defines identity in a way few technologies ever have.

It’s culture, community, and confidence — wrapped around your ears.

🔊 Modern Soundscapes — Blending Art with Algorithm

The modern sound experience is no longer purely mechanical or digital — it’s hybrid artistry.

Many of the ambient tones, UI clicks, and notification chimes you hear are crafted by dedicated sound designers.

These micro-sounds, part of a practice known as sonic branding, have become the emotional signatures of modern technology.

From Apple’s startup chime to Netflix’s “ta-dum”, these sounds trigger emotional memory instantly.

In 2025, brands are evolving this further — creating adaptive sound logos that shift tone depending on your device or time of day.
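An adaptive sound logo can be thought of as one melodic motif rendered differently by context. In this hypothetical sketch, the four-note motif, the octave drop in the evening, and the shorter notes for a watch speaker are invented rules for illustration, not any brand's real logic; only the MIDI-to-frequency formula (A4 = MIDI note 69 = 440 Hz, 12 equal-tempered semitones per octave) is standard.

```python
# A hypothetical four-note logo motif as MIDI note numbers (C5, E5, G5, C6).
LOGO_MOTIF = [72, 76, 79, 84]


def midi_to_hz(note: int) -> float:
    """Equal-tempered pitch with A4 (MIDI 69) tuned to 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


def adaptive_logo(hour: int, device: str) -> list:
    """Return (frequency_hz, duration_s) pairs for one playback of the logo."""
    # Assumed rule: from 7 p.m. to 7 a.m., drop an octave for a calmer tone.
    shift = -12 if hour >= 19 or hour < 7 else 0
    # Assumed rule: a small watch speaker gets a quicker, shorter rendition.
    note_len = 0.08 if device == "watch" else 0.15
    return [(midi_to_hz(n + shift), note_len) for n in LOGO_MOTIF]


if __name__ == "__main__":
    print(adaptive_logo(12, "phone"))
    print(adaptive_logo(22, "watch"))
```

Because only pitch and timing change, the motif's shape stays recognizable across contexts, which is what lets an adaptive logo vary without losing its identity.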

Sound is no longer an accessory to design — it is design.

And the best examples blur the line between art and algorithm.

🌍 Global vs Local — The Future of Sound Identity

As sound goes global, local audio cultures are pushing back.

In Japan, audio firms fine-tune products for minimal environmental distortion — respecting silence as a design element.

In India, brands like Noise, boAt, and Boult are designing for bass-heavy, rhythm-rich music traditions.

Meanwhile, European companies emphasize tonal neutrality and cinematic realism.

This diversity is proof that modern sound isn’t universal — it’s contextual.

It reflects the culture it’s built for.

And as AI becomes more localized, your next earphones might not just know you — they’ll know where you’re from.

💡 Sustainability and Sound — The Green Frequency

Beyond decibels and design, a quieter revolution is taking shape — eco-acoustics.

Brands like Urbanears and boAt are exploring recycled materials, biodegradable packaging, and solar-powered charging cases.

Consumers, especially Gen Z, are beginning to associate clean sound with clean ethics.

In 2025, sustainability has become the new standard of “premium.”

It’s not just about how your device sounds — it’s about what it stands for.

🎯 The Emotional Core — Why Sound Still Feels Human

Despite all the AI, data, and algorithms, the emotional truth of sound remains the same:

We crave connection.

Every vibration, every note, and every pause is part of a universal language that doesn’t need translation.

Modern sound design simply gives that emotion new tools to travel farther and hit deeper.

When your playlist matches your mood before you realize it, when a movie soundtrack gives you goosebumps, or when silence itself feels designed — that’s the point where sound stops being technology and becomes human again.

🚀 The Road Ahead — Toward Sonic Intelligence

By 2030, we’ll likely see:

- Emotion-aware earbuds that detect mood changes and adapt in real time.

- Neural sound feedback systems for health and therapy.

- Spatial audio ecosystems integrated into AR and mixed reality platforms.

The future of sound will not be louder — it will be smarter, softer, and infinitely more personal.

The new goal isn’t perfect audio — it’s perfect resonance.

💬 The Vibetric Verdict

Modern sound is no longer a competition of decibels — it’s a dialogue between design, science, and soul.

It’s where frequency meets feeling, and technology becomes touch.

At Vibetric, we believe this evolution marks the next era of emotional design — where every waveform carries identity, culture, and purpose.

“Sound isn’t just something you hear. It’s something that hears you back.”

🔗 Stay in the Loop

We help you choose smarter, not louder.

- Follow @vibetric_official on Instagram for insights into sound innovation, design psychology, and India’s growing role in global audio culture.

- Bookmark Vibetric.com for deep dives, expert breakdowns, and community-tested recommendations.

No fluff. No bias. Just honest performance — the Vibetric way.

💬 What’s your take on this?

The comment section at Vibetric isn’t just for reactions — it’s where creators, thinkers, and curious minds exchange ideas that shape how we see tech’s future.

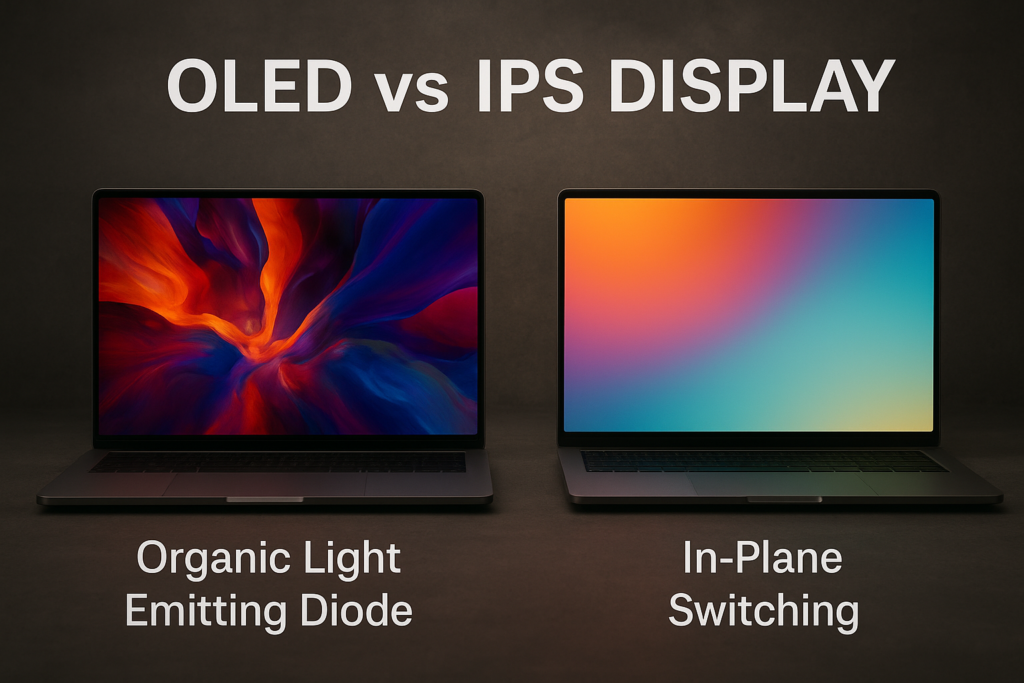

OLED vs IPS — Which Display Lasts Longer?

OLED vs IPS — Which Display Lasts Longer? Laptop displays in 2025 have reached a crossroads: OLED panels promise cinematic visuals, while

Why Battery Life Varies So Much Across Regions

Why Battery Life Varies So Much Across Regions Laptop users often assume battery life is a fixed, objective figure determined by watt-hour